Taking Uncertainty Seriously: Part 1

Key Takeaways

- Uncertainty Is the Real Divide: The qualitative versus quantitative debate is fundamentally about whether uncertainty is hidden or made explicit.

- Qualitative Methods Excel at Triage: They help prioritize which risks deserve attention but are not suited to supporting trade-offs or investment decisions.

- Risk Matrices Mask Uncertainty: Heat maps and ordinal labels create the appearance of certainty while avoiding hard questions about range and impact.

- Quantitative Risk Aims to Be Less Wrong: Effective quantitative approaches surface uncertainty through honest ranges rather than false precision.

- Qualitative and Quantitative Are Complementary: Judgement helps decide what matters; quantification helps decide what to do about it.

Deep Dive

If your exposure to quantitative risk has ever felt a little intense, you’re not imagining it.

For many people in GRC, the first encounter with “quant risk” does not come through a calm explanation or a practical example. It shows up instead as a conference talk, a blog post, or a hallway conversation where someone is clearly fed up. The tone can sound fervent, sometimes even evangelical. If you are standing outside that world, it can feel off-putting, not because the ideas are obviously wrong, but because the energy does not match how risk work usually feels day to day.

Risk work, as most of us experience it, is pragmatic and incremental, shaped by constraints, politics, and the reality that you are rarely starting from a clean slate. So, when someone shows up sounding like they want to burn the risk matrix to the ground, it is natural to recoil and wonder what all the fuss is about.

If you have ever felt confused, discouraged, or quietly skeptical in those moments, that reaction makes sense. It is worth slowing down long enough to understand why this conversation sounds the way it does.

Underneath the tone, there is a real and important disagreement hiding in plain sight.

Most of us never learned the difference between qualitative and quantitative risk in any deliberate way. We absorbed it indirectly. For some, it came from a framework diagram that labelled one box “qualitative” and another “quantitative.” Others encountered it in a certification book, like the CISSP, that introduced formulas without much context. Still others picked it up from a passing conversation that sounded authoritative enough to stick.

Over time, those fragments hardened into folk definitions:

- Qualitative risk is subjective but practical.

- Quantitative risk is objective but unrealistic.

- Quant requires perfect data, heavy maths, and specialized tools.

- Most organizations are not ready, so qualitative is “good enough.”

None of that came from practice. It came from exposure without experience. The result is that nearly everyone in GRC has an opinion about quantitative risk, but very few people were ever given a usable mental model for what it is.

This would not matter much if the distinction were academic. But it is not. It shows up every time a risk report lands in front of an executive and fails to change a decision. Every time a heat map sparks debate about colors instead of trade-offs. Every time we sense, quietly, that we are doing a lot of work without moving the organization very far.

Here is the part that tends to get lost. The real difference between qualitative and quantitative risk is not words versus maths. It is not subjective versus objective. It is definitely not simple versus advanced. The real difference is how uncertainty is treated.

Qualitative approaches, as they are commonly practiced, hide uncertainty. They take a messy, ambiguous reality and squeeze it into categories that feel decisive. High, medium, low. Red, yellow, green. Those labels are comforting. They create the appearance of clarity. But they do it by hiding what we do not know.

Quantitative approaches, when done well, do the opposite. They surface uncertainty. They make it visible, explicit, and bounded. The aim is not to eliminate uncertainty, but to work with it. They replace false certainty with honest ranges. They do not promise precision. Instead, they aim to be less wrong.
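To make "honest ranges" a little more concrete, here is a minimal sketch. It is an illustration, not a method from this series, and you do not need to run it to follow the argument. Every number is a made-up assumption: a hypothetical scenario whose annual probability sits somewhere between 10% and 30% and whose loss sits somewhere between $50,000 and $500,000. Both are drawn from uniform distributions purely for simplicity, and the function names are invented for this example.

```python
import math
import random


def simulate_annual_losses(prob_low, prob_high, loss_low, loss_high,
                           trials=10_000, seed=1):
    """Simulate one year of losses many times and return them sorted.

    All inputs are ranges, not point estimates (hypothetical values).
    Uniform distributions are a simplifying assumption for illustration.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    losses = []
    for _ in range(trials):
        # We are unsure of the probability itself, so sample it from its range.
        annual_probability = rng.uniform(prob_low, prob_high)
        if rng.random() < annual_probability:
            # The event happened this year; sample how bad it was.
            losses.append(rng.uniform(loss_low, loss_high))
        else:
            losses.append(0.0)
    return sorted(losses)


def percentile(sorted_values, q):
    """Nearest-rank percentile of an already sorted list, q in [0, 100]."""
    rank = math.ceil(q / 100 * len(sorted_values))
    return sorted_values[max(0, rank - 1)]


losses = simulate_annual_losses(0.10, 0.30, 50_000, 500_000)
share_with_loss = sum(1 for x in losses if x > 0) / len(losses)

print(f"Years with any loss: {share_with_loss:.0%}")
for q in (50, 90, 95, 99):
    print(f"{q}th percentile annual loss: ${percentile(losses, q):,.0f}")
```

Nothing in that output is precise, and it is not meant to be. It states what we think we know and how wide the uncertainty is, which is something a single label cannot do.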

This is where much of the emotion in the debate comes from.

People who advocate for quantitative risk are not disruptive because they love maths. They are disruptive because they have spent years watching organizations make high-stakes decisions using models that collapse the moment someone asks simple follow-up questions: How much worse? Compared to what? What’s the worst that could happen?

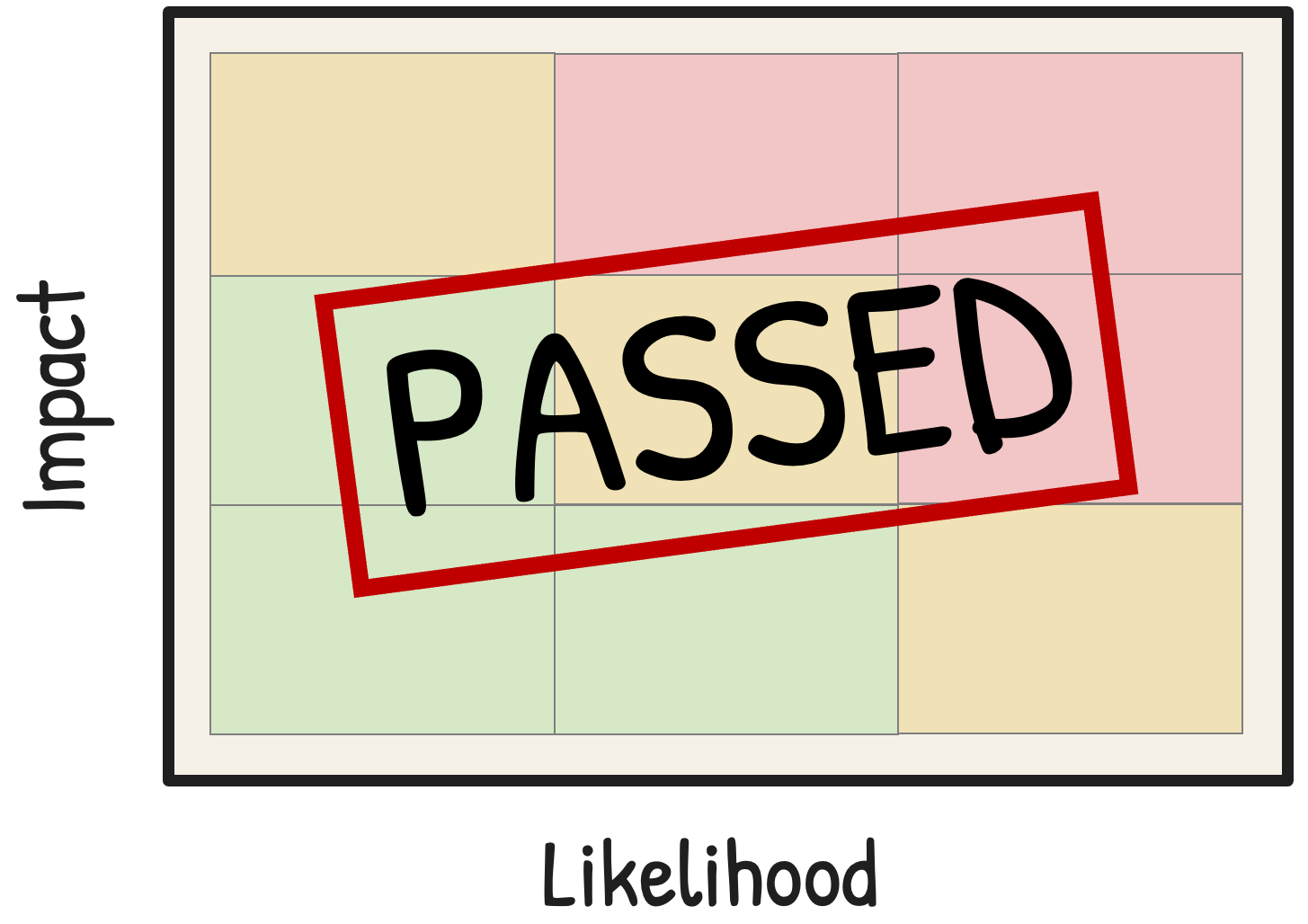

Risk matrices, in particular, tend to provoke this reaction. Not because qualitative judgement is bad, but because matrices quietly pretend to be decision models. They take ordinal labels and ask them to carry quantitative weight. They hide hard trade-offs behind soft categories, then dare anyone to interrogate the result. After all, it passed an audit.
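A small sketch shows what it means for ordinal labels to carry quantitative weight. The bin thresholds, the two scenarios, and all of the figures below are hypothetical, chosen only for illustration and not taken from any real matrix. Expected annual loss is used here as a deliberately crude single number, just to show how much information the label discards.

```python
def likelihood_label(annual_probability):
    """Map a probability onto an ordinal label (hypothetical thresholds)."""
    if annual_probability >= 0.5:
        return "High"
    if annual_probability >= 0.1:
        return "Medium"
    return "Low"


def impact_label(loss_usd):
    """Map a loss amount onto an ordinal label (hypothetical thresholds)."""
    if loss_usd >= 1_000_000:
        return "High"
    if loss_usd >= 100_000:
        return "Medium"
    return "Low"


def matrix_cell(scenario):
    """Return the (likelihood, impact) cell a scenario lands in."""
    return (likelihood_label(scenario["annual_probability"]),
            impact_label(scenario["loss_usd"]))


def expected_annual_loss(scenario):
    """Probability times loss: a crude point estimate, used only for contrast."""
    return scenario["annual_probability"] * scenario["loss_usd"]


# Two made-up scenarios that look identical on the heat map.
scenario_a = {"name": "A", "annual_probability": 0.5, "loss_usd": 1_000_000}
scenario_b = {"name": "B", "annual_probability": 0.9, "loss_usd": 20_000_000}

for scenario in (scenario_a, scenario_b):
    print(scenario["name"], matrix_cell(scenario),
          f"${expected_annual_loss(scenario):,.0f}")
```

Both scenarios land in the same High/High cell, yet under these assumed figures one is more than thirty times larger than the other. The matrix cannot answer "how much worse?" because the label has already thrown that information away.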

After you have watched that happen enough times, it is hard not to sound frustrated about it. This is where I want to be very precise, because the distinction matters. I am not against qualitative judgement.

You do not need a quantitative risk assessment to know that ransomware deserves more attention than asteroid strikes. That is a qualitative judgement call, and it is a good one. Qualitative thinking excels at triage. It helps us decide where to look. It filters a vast risk universe down to a manageable set of scenarios worth attention.

Where things break is when we take the outputs of that judgement (colors, labels, rankings) and ask them to do quantitative work: to support investment decisions, compare trade-offs, or justify outcomes. That is not a failure of judgement. It is a mismatch between tool and task.

The problem is not that qualitative methods exist. The problem is that we keep asking them to behave like a measurement system.

Once you see that, much of the noise in this debate quiets down. The argument stops being about which camp you are in and starts being about fitness for purpose. Qualitative judgement helps you decide what matters. Quantitative models help you decide what to do about it. You do not have to choose between them.

If all of this still feels abstract, that is okay. This is not the part where you are supposed to run an analysis or learn a method. This is the part where we clean up the mental model most of us inherited and replace it with something more honest.

The rest of this series is about small steps. Practical shifts in how we think about risk that improve conversations long before anyone runs a simulation. No maths heroics or revolutions. Just a willingness to stop pretending we are certain when we are not.

In the next piece, we will start with the smallest possible upgrade you can make once you stop thinking of quantitative risk as a maths problem and start thinking of it as an honesty problem.

Tony Martin-Vegue is an advisor, author, and independent researcher focused on quantitative cyber risk and decision-making under uncertainty. He has worked with Fortune 500 companies and global technology firms to design and operationalize quantitative risk programs, helping organizations move beyond qualitative reporting toward decision-grade risk analysis for executives and boards.

He is the author of the upcoming book From Heatmaps to Histograms: A Practical Guide to Cyber Risk Quantification (Apress, early 2026), which distills lessons from real-world program builds, thousands of risk analyses, and years of applied research into how organizations make risk decisions.

